Kullback Leibler Divergence

Published: 2020-05-24

In this post we will explore the Kullback-Leibler divergence, its properties, and how it relates to Jensen's inequality.

The Kullback-Leibler (KL) divergence measures how much one probability distribution differs from another by taking the expected value, under $q(x)$, of the log-ratio between $q(x)$ and $p(x)$. The formula of the KL divergence is given by:

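$$
\begin{aligned}
KL(q \,\|\, p) &= \mathbb{E}_{q(x)} \log \frac{q(x)}{p(x)} \\
&= \int q(x) \log \frac{q(x)}{p(x)} \, dx
\end{aligned}
$$

Why do we need the KL divergence? Why don't we just use the distance between parameters to measure the difference? Because the KL divergence compares the distributions themselves, it captures how different they are better than a distance between parameters does.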
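To make the integral form of the definition concrete, here is a minimal sketch that approximates it with a midpoint Riemann sum. The two Gaussians, the helper names `normal_pdf` and `kl_numeric`, and the integration range are illustrative assumptions, not something taken from the figures in this post.

```python
import math


def normal_pdf(x, mean, std):
    """Density of a univariate Gaussian N(mean, std^2)."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def kl_numeric(q, p, lo=-12.0, hi=12.0, n=24_000):
    """Approximate KL(q || p) = integral of q(x) * log(q(x) / p(x)) dx
    with a midpoint Riemann sum on [lo, hi]."""
    dx = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * dx
        qx, px = q(x), p(x)
        if qx > 0.0:  # points where q(x) = 0 contribute nothing
            total += qx * math.log(qx / px) * dx
    return total


# Assumed example: q = N(0, 1) and p = N(1, 1).
def q(x):
    return normal_pdf(x, 0.0, 1.0)


def p(x):
    return normal_pdf(x, 1.0, 1.0)


kl_qp = kl_numeric(q, p)
print(f"KL(q || p) is approximately {kl_qp:.4f}")
```

For this particular pair the result should come out close to 0.5, which is the closed-form value for two unit-variance Gaussians whose means differ by 1.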

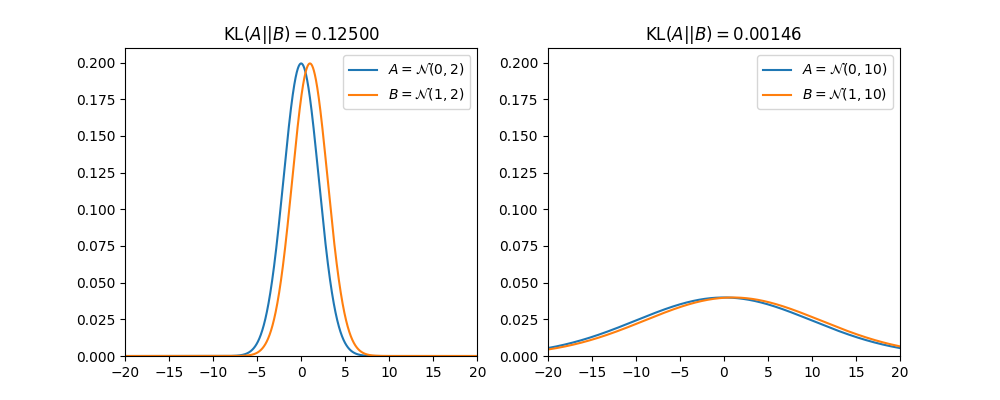

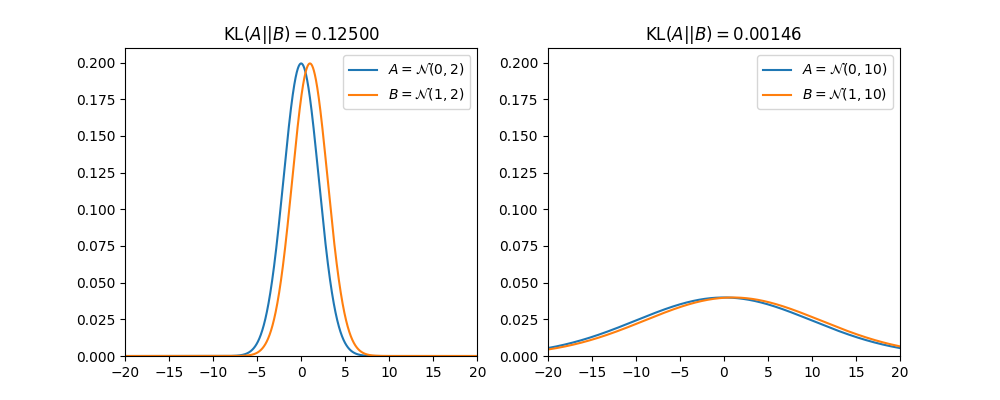

For example, look at the distribution comparisons pictured above. Both comparisons have the same parameter distance, which is 1. Graphically, however, we can see that the distributions in the second comparison are closer to each other than those in the first. The KL divergence reflects this: as shown in the figures, its value for the second comparison is lower than for the first. Now let's look at the properties of the KL divergence.
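The text does not state which distributions the figures use, so the following is only a sketch under an assumption: two pairs of Gaussians whose means differ by 1, with the second pair having a larger standard deviation. It uses the standard closed form for the KL divergence between two univariate Gaussians; the function name `kl_gaussian` and the parameter values are hypothetical.

```python
import math


def kl_gaussian(mu_q, sigma_q, mu_p, sigma_p):
    """Closed-form KL(q || p) for q = N(mu_q, sigma_q^2), p = N(mu_p, sigma_p^2)."""
    return (
        math.log(sigma_p / sigma_q)
        + (sigma_q ** 2 + (mu_q - mu_p) ** 2) / (2.0 * sigma_p ** 2)
        - 0.5
    )


# Both pairs have the same distance between means (assumed values).
narrow = kl_gaussian(0.0, 1.0, 1.0, 1.0)  # standard deviation 1 for both
wide = kl_gaussian(0.0, 2.0, 1.0, 2.0)    # standard deviation 2 for both

print("narrow pair:", narrow)
print("wide pair:  ", wide)
```

With equal standard deviations the formula reduces to the squared mean difference divided by twice the variance, so the wider pair gets the smaller divergence even though the parameter distance is unchanged.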

Properties of the Kullback-Leibler divergence:

1. $KL(q(x) \,\|\, p(x)) \neq KL(p(x) \,\|\, q(x))$ (asymmetric)
2. $KL(q(x) \,\|\, q(x)) = 0$
3. $KL(q(x) \,\|\, p(x)) \geq 0$ (non-negativity)

The reason for the 1st property is that interchanging $q(x)$ and $p(x)$ changes both the distribution the expectation is taken over and the log-ratio inside it. The result is a different expression, so in general the KL divergence is not symmetric. That is also why the KL divergence is not called a distance.

The proof of the 2nd property is as follows:

$$
\begin{aligned}
KL(q \,\|\, q) &= \int q(x) \log \frac{q(x)}{q(x)} \, dx \\
&= \int q(x) \log(1) \, dx \\
&= \int q(x) \cdot 0 \, dx \\
&= 0
\end{aligned}
$$

Lastly, the proof of the 3rd property is as follows:

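$$
-KL(q \,\|\, p) = \mathbb{E}_{q(x)} \left[ -\log \frac{q(x)}{p(x)} \right] = \mathbb{E}_{q(x)} \log \frac{p(x)}{q(x)}
$$

Using Jensen's inequality (the logarithm is concave, so the expectation of the log is at most the log of the expectation):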

$$
\mathbb{E}_{q(x)} \log \frac{p(x)}{q(x)} \leq \log \mathbb{E}_{q(x)} \frac{p(x)}{q(x)}
$$

On the right-hand side we have:

$$
\log \mathbb{E}_{q(x)} \frac{p(x)}{q(x)} = \log \int q(x) \frac{p(x)}{q(x)} \, dx = \log \int p(x) \, dx = \log(1) = 0
$$

Going back to our inequality, we get:

$$
\mathbb{E}_{q(x)} \log \frac{p(x)}{q(x)} \leq 0
$$

Because $-KL(q \,\|\, p)$ is always negative or equal to zero, $KL(q \,\|\, p)$ is always non-negative!
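As a quick sanity check of the three properties and of the Jensen step, here is a small sketch on discrete distributions, where the integral becomes a sum. The two distributions and the helper name `kl_discrete` are arbitrary choices made for illustration.

```python
import math


def kl_discrete(q, p):
    """KL(q || p) = sum over x of q(x) * log(q(x) / p(x)) for discrete distributions."""
    return sum(qx * math.log(qx / px) for qx, px in zip(q, p) if qx > 0.0)


# Two arbitrary distributions over three outcomes (assumed values).
q = [0.2, 0.5, 0.3]
p = [0.6, 0.3, 0.1]

kl_qp = kl_discrete(q, p)
kl_pq = kl_discrete(p, q)
kl_qq = kl_discrete(q, q)

# Jensen step: E_q[log(p/q)] <= log E_q[p/q] = log(sum of p) = log(1) = 0.
lhs = sum(qx * math.log(px / qx) for qx, px in zip(q, p))
rhs = math.log(sum(qx * (px / qx) for qx, px in zip(q, p)))

print("KL(q || p) =", kl_qp)
print("KL(p || q) =", kl_pq)  # differs from KL(q || p): asymmetry
print("KL(q || q) =", kl_qq)  # zero self-divergence
print("E_q[log(p/q)] =", lhs, "<= log E_q[p/q] =", rhs)
```

Here `lhs` is exactly $-KL(q \,\|\, p)$, so seeing it at or below zero is the non-negativity property in numerical form.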


update:

(27-06-2023) Scaling y axis of the figures.